Is artificial intelligence the future of assisted suicide?

A few years ago, while binging on Elementary, I came across an episode where Sherlock Holmes is confronted with an ‘Angel of Death’. The plot, of course, unravels in much more complicated ways than that; no spoilers. But once caught, the ‘Angel’, stern in his commitment, claims that he was doing the patients a favour by relieving them of their agonising misery. And on the surface he is right. He was helping people with terminal ailments to pass on, people who were in excruciating pain. But the ethics of physician-assisted suicide have been debated for decades and are as slimy as the mangroves of Sundarban. It is illegal in all but a few countries.

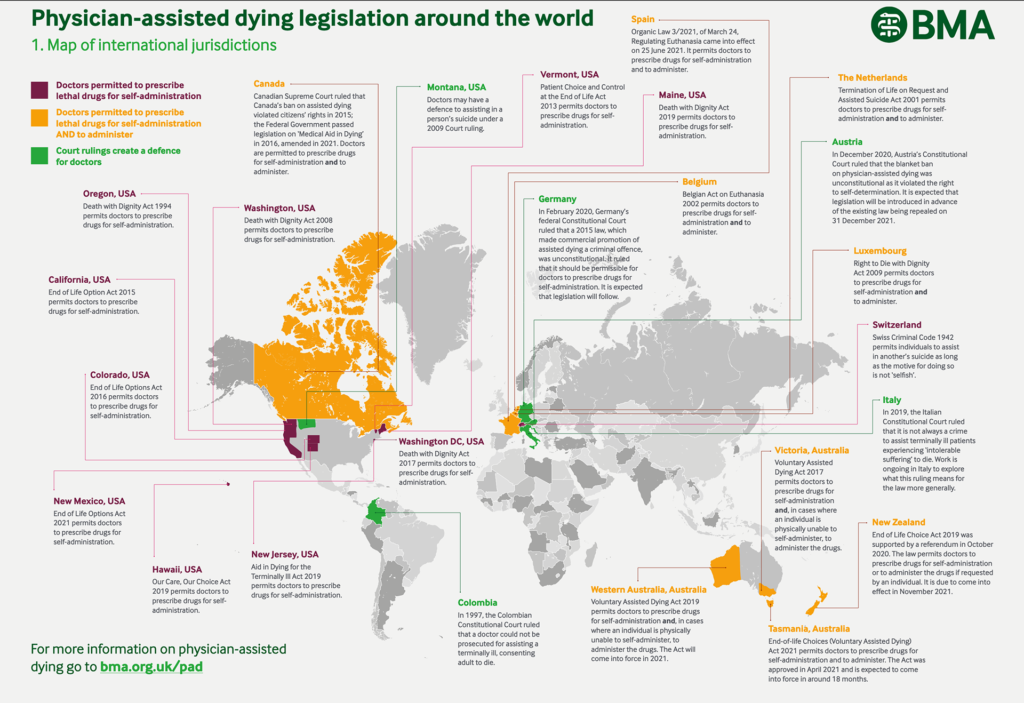

The World Medical Association reiterates “…its strong commitment to the principles of medical ethics and that utmost respect has to be maintained for human life. Therefore, the WMA is firmly opposed to euthanasia and physician-assisted suicide.” And below is a chart by the BMA illustrating the legislation so far.

But that is a discussion for another time.

Then is AI assisted suicide the future?

In Assisted suicide on demand, Stav Dimitropoulos claims that ‘With Exit International’s new Sarco suicide pod and experimental AI, the future of assisted suicide is ripe for disruption.’

The article explains that “His [Nitschke] AI will assess a person’s eligibility for suicide using the guidelines suggested by a professor of law, health and ethics at the University of Sydney named Cameron Stewart, published in the Journal of Medical Ethics in 2011. According to them, a person must be able to comprehend and retain information on the decision to end their life (method chosen, risks of failure, impact of their decision on others included), explain why they reached the decision, demonstrate there has been no undue influence from others, and pass a cognitive ability test using the Mini-Mental State Examination (a widely used test of cognitive function, mostly among the elderly though).”

The article also suggests that “The AI that Nitschke and his team are creating can be completed online, in the form of an interactive program that asks the person a series of questions and assesses their responses, reaching a conclusion as to the mental capacity of the interviewee. In theory the way it would work is that, if the AI determines a person is eligible for suicide, it would then give them a code to activate the Sarco—a “free pass” to the other world. This would take in total less than 24 hours, but realistically, Nitschke is aware it could take many years for laws to catch up with the technology and allow a piece of algorithm to be able to decide who is fit to choose their own death. “In the beginning, we will still have people talk to a psychiatrist. If the person says yes, well, then we’ll say we can give you the code,” he says.”

The question, though, is: “How does an AI define the right circumstances?”

I could not find enough text on the topic, and it seems that the workings of this AI are hidden behind a thick wall. In the absence of any legislation, it is anybody’s guess where this is headed. But going by the number of media reports glorifying this technology, it is only a matter of time before intervention arrives.

What I have found disturbing throughout my research into AI, whether in therapy or in other medical practices, is the confidence that this is the ultimate solution. Yes, it is exciting, I cannot disagree, but the truth seems to be that we are decades away from anything that might truly improve human lives. It also sounds like we are leaning further and further toward technological dependency to solve problems that require human compassion and humility.

Who knows what the future holds now.
